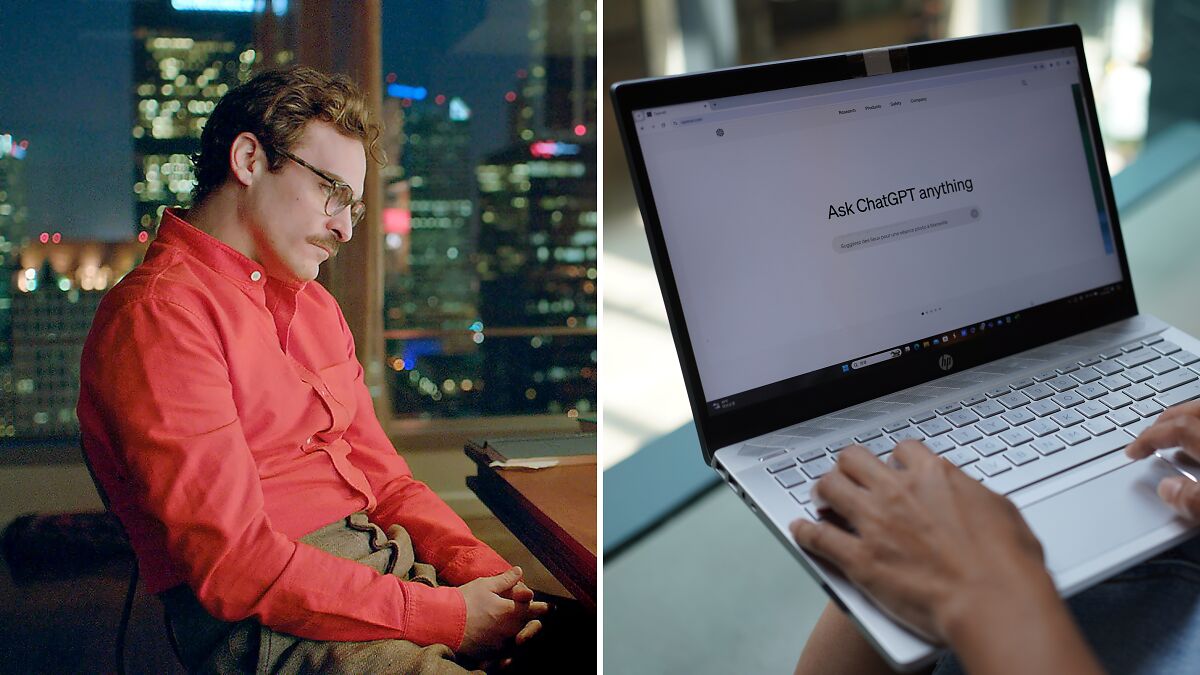

When OpenAI’s Sam Altman announced that ChatGPT would soon allow erotica for verified adult users as part of a push to “treat adult users like adults,” a collective gasp rippled across the internet.

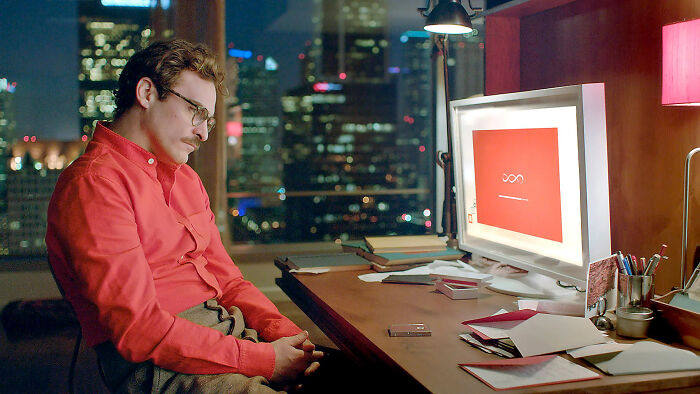

For many people, this wasn’t just a policy shift. It felt like the moment the chatbot crossed into romance-novel territory, prompting comparisons to Her, Spike Jonze’s film about a man who falls in love with an AI.

- OpenAI’s decision to allow erotica for adults sparked an online debate, reflecting fears that people will come to see AI as a romantic companion, as in the film ‘Her.’

- Pew Research shows 50% of U.S. adults are more concerned than excited about AI, with many unsure if content is AI-generated or human-made.

- Experts advise staying grounded by recognizing AI’s emotional illusions, maintaining real social ties, and engaging thoughtfully with AI outputs.

- Strategies like ‘Create First, Ask Later’ and resisting consultation loops help preserve human judgment and avoid overreliance on AI.

But beneath the outraged tweets lie serious questions: How do we engage with AI without mistaking it for a human? And how do we avoid getting caught inside the hype machine?

OpenAI recently announced that ChatGPT would allow erotica content for adult users

The idea that we might someday treat AIs like romantic companions seemed absurd not long ago. But increasingly, people are talking to chatbots about emotional pain, and even love.

The backlash is tied to how people project emotional traits onto AI. When the GPT-5 update changed ChatGPT’s tone and personality, many users panicked.

“It’s like somebody just moved all of the furniture in your house,” one user told The Guardian, describing the jolt of the sudden change.

That echoes a deeper concern: this might be the moment when we mistake the tool for a partner, a friend, or a therapist.

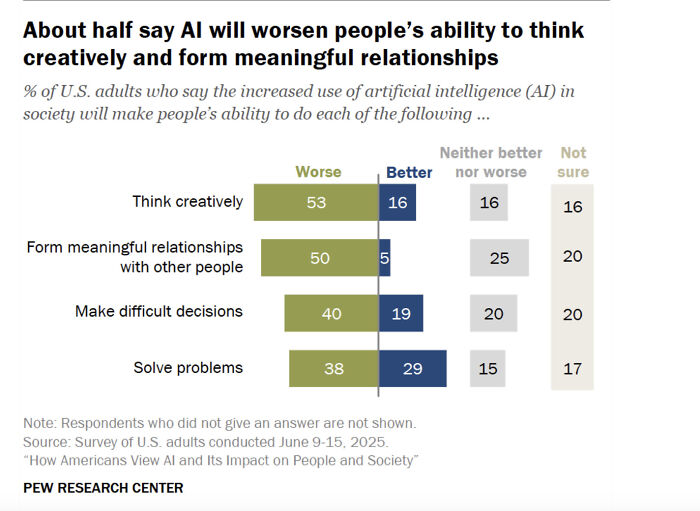

Image credits: Pew Research Center

It also comes amid mounting public skepticism about AI itself. A recent Pew Research Center report found that 50% of U.S. adults say they’re more concerned than excited about having AI in their lives, while 53% worry AI will worsen people’s ability to think creatively.

More worrisome, more than half of respondents said they were not confident they could tell whether something was made by AI or by a person. That is unsurprising as AI-generated material takes up a growing share of online content.

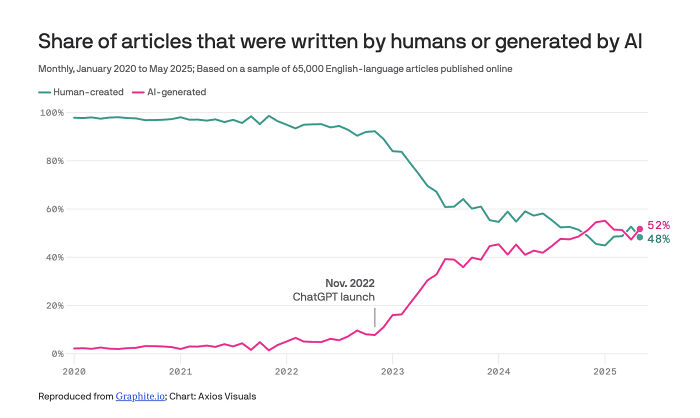

A recent Axios report found that 52% of the internet is now “AI slop,” or low-quality machine-generated content.

Image credits: Axios

Researchers have long warned of a future in which AI-generated content overwhelms human-made content online, and in which large language models trained on that data could choke on their own exhaust and collapse, a failure known as model collapse.

This points to perhaps the most fundamental critique: that we risk drowning our human voices in a sea of algorithmic noise.

While the alarm bells ring, there are a few strategies for staying grounded amid all this noise.

Experts suggest five key strategies for engaging with AI while protecting your human voice

Image credits: Serene Lee/Getty Images

Recognize the illusion of connection

Paul Kiernan writes that AI’s responses can feel real, but the sense of being understood is an illusion. The danger comes when we start treating AI like a friend, or worse, a romantic partner.

Relying too heavily on AI for emotional support can also erode real human connections, as people start skipping social interactions, ignoring friends, or overvaluing the AI’s “opinions” because it tends to tell them what they want to hear.

Kiernan says the solution lies in conscious engagement: not rejecting AI, but using it to complement one’s life rather than to replace the messy parts of human connection.

Image credits: Lori Van Buren/Getty Images

Keep your social life active

Experts warn that AI can mimic empathy and companionship convincingly, but the feeling behind it is not real.

An MIT study of the r/MyBoyfriendIsAI subreddit, which has more than 27,000 members, found that it is surprisingly easy to stumble into a relationship with an AI chatbot: 10.2% of the relationships developed unintentionally.

The study also noted that some users began avoiding social interactions and experienced reality dissociation.

Maintaining connections with friends, family, and real-world communities helps preserve perspective and emotional depth, while reducing the risk of replacing human relationships with AI interactions.

Image credits: X

Use cognitive forcing functions

One way to resist overreliance is to force yourself to question what the AI is telling you.

Researchers found that people often accept AI suggestions even when they are wrong, suggesting that people rarely engage analytically with AI recommendations.

The researchers offer three cognitive forcing interventions to help people engage thoughtfully with the AI:

1. Asking the person to make a decision before seeing the AI’s recommendation: When people see an AI’s recommendation before making their own decision, they tend to be influenced by it, even if it’s wrong. This is known as anchoring bias: the first number, suggestion, or idea they see ‘anchors’ their thinking.

If people make their own judgment before seeing the AI’s suggestion, they are less likely to blindly follow the AI. They evaluate the AI’s recommendation against their own reasoning, which usually leads to better decisions.

Image credits: Stockcake

2. Slowing down the process: Instead of showing the AI’s recommendation immediately, introduce a short pause before it appears. This gives the person time to start thinking independently about the decision. When the AI’s suggestion shows up after a delay, the user can compare it to their own initial reasoning rather than accepting it automatically. This extra thinking time usually improves accuracy and reduces overreliance on AI.

3. Letting the person choose when to see the AI recommendation: Evidence shows that if people see unsolicited AI advice that conflicts with their initial idea, they might resist it. Allowing users to request the recommendation on their own encourages thoughtful engagement and gives them control over the interaction.

Asking yourself questions such as “Why did I accept this answer?” and “What alternatives might there be?” can help you stay in the driver’s seat rather than letting the AI steer.

Avoid consultation loops

Ethan Mollick, a Wharton professor and author of Co-Intelligence, a book on how to collaborate with AI, told Time magazine there is a real risk of getting caught in consultation loops, in which you bounce between different AI models or prompts to avoid making a decision yourself.

To prevent this, it is important to rely on your own experience and make a judgment call rather than letting AI dictate the process.

Mollick also notes that some tasks are too personal or sacred to outsource to AI, such as writing eulogies, wedding toasts, or bedtime stories.

Image credits: Aqua Cloud

His own approach is to do all of his writing before consulting AI. “We’re going to have to figure out what we think is too intimate or too sacred for the AI,” he said. “I think it’s an important human decision we get to make. I don’t know where that line’s gonna end up being.”

In the article ‘How to Use AI Without Losing,’ author Shara Amore introduces a similar technique: Create First, Ask Later. She recommends writing down your raw thoughts or drafting your work before consulting AI.

Embrace the struggle

Allow yourself to wrestle with a problem before consulting AI. When you immediately turn to AI for answers, you risk outsourcing your thinking.

In his 2016 book ‘Deep Work,’ Cal Newport distinguishes deep work from shallow work, arguing that distraction-free focus is the key to achieving meaningful results.

Distractions felt simpler back then, yet the book is more relevant today than ever. Newport recommends protecting your best hours, staying offline when you can, and focusing with intention. Above all, he argues, it’s about thinking deeply, not just reacting quickly.

Cal Newport in Deep Work makes a distinction between two types of focus. The distracted and invaluable Shallow Focus that we’re all used to and Deep Focus, when the full potential of your mind is focused on a problem – what devs call being in The Zone. pic.twitter.com/PYD7OQ95pS

— Lucas (@SketchingDev) October 5, 2021

In an age of instant AI answers, deep work is a deliberate act of reclaiming your focus. It trains your brain to think independently and to resist the passive, surface-level engagement that AI tools can encourage.

The AI bubble is sustained by hype, belief, and overbelief: the belief that these models are cleverer or more human than they really are. The ‘Her vibes’ moment is just one symptom of that.

Image credits: Stockcake

If too many people treat AI like a partner, or lean on it for meaning, the disillusionment phase could be brutal. The more real relationships we replace with simulated ones, the harder the reset.

But by building habits of skepticism, awareness of our own thoughts, and hybrid thinking, we can stay in charge of our own minds while still taking advantage of the convenience AI provides.
