U.S. President Donald Trump’s latest executive order says the U.S. federal government will only procure large language models (LLMs) that are “neutral” and “nonpartisan.”

The Trump administration has previously issued orders dismantling diversity, equity, and inclusion (DEI) initiatives across different sectors, and now it is doing the same for tech.

- A Donald Trump executive order requires the government to procure only neutral, nonpartisan large language models, rejecting DEI influences as ideological bias.

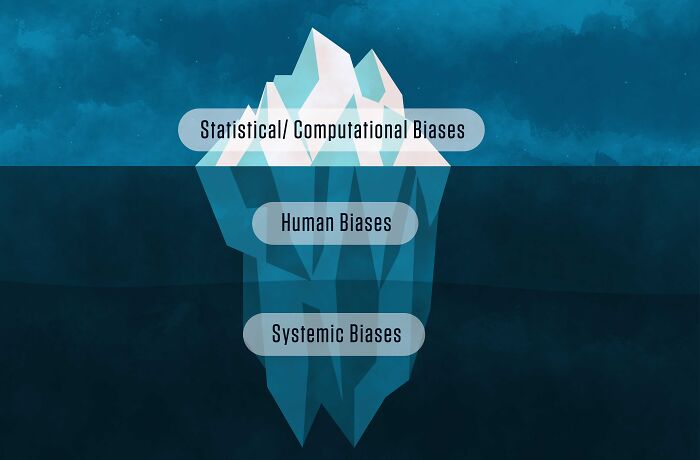

- Experts say defining and achieving AI neutrality is nearly impossible due to subjective biases and inherently biased internet data.

- LLMs are 'black boxes' using billions of parameters, making it difficult even for creators to explain why specific outputs are generated.

- Government mandates for AI neutrality differ from private sector practices, forcing companies to comply with strict federal procurement rules.

- Removing AI bias is both a technical and political challenge, complicated by the defunding of institutions responsible for AI safety research.

The order calls DEI a “pervasive and destructive bias” and prohibits models that promote these “ideological dogmas.”

A new executive order says the government will only procure LLMs that are neutral and nonpartisan

Image credits: Andrew Harnik/Getty Images

The order, titled ‘Preventing Woke AI in the Federal Government,’ is part of the broader ‘Winning the AI Race: America’s AI Action Plan.’

It states, “LLMs shall be neutral, nonpartisan tools that do not manipulate responses in favor of ideological dogmas such as DEI,” adding that LLM developers should not encode ideological judgments into a model’s outputs unless users prompt for them.

But while the idea of politically neutral AI may sound simple, experts say it is extremely difficult to define and achieve.

Speaking anonymously to BP Daily, an AI data researcher in Silicon Valley said the biggest challenge with this AI policy is agreeing on what neutrality even means.

Image credits: Freepik

“Some statements are more factual, like an accident report. If an Air India plane crashed and people died, that’s something most people can agree on,” the researcher said.

“But until the full accident report is out, it’s harder to agree on why it happened. That’s a gray area. When even humans can’t agree on these gray areas, it’s harder to align or tune LLMs to be neutral.”

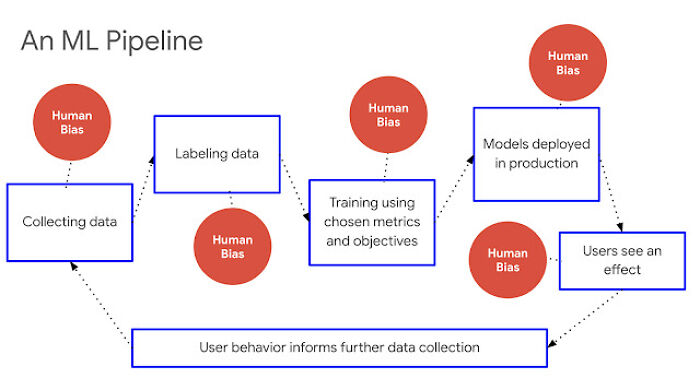

According to the researcher, large AI models are “neural networks” that don’t inherently know anything. “They are a function of data in output. So these models don’t know anything by themselves. The bias they output depends on the data they’re trained on,” they said.

“It’s quite possible to have a de-biased model, but only if the data you feed it is de-biased. The internet itself is inherently biased, so without very aggressive filtering, some bias will always leak through.”

The biggest challenge with AI policy is agreeing on what neutrality even means, a Silicon Valley data researcher told BP Daily

Image credits: Nist.gov

Nicol Turner Lee of the Brookings Institution also notes that bias is subjective and means different things to different people.

In addition, LLMs are shaped by their developers, whose worldviews and backgrounds influence the reasoning behind a model’s design.

“It is highly improbable to train AI on data that has not otherwise been impacted by the lived experiences of people and their communities,” Lee noted.

Applying a standard of “truth” to AI systems will also be a huge effort, given that online information is inherently biased.

Even with the best data, another obstacle is the “black box” nature of modern AI. LLMs use billions of parameters in ways that are often not fully transparent, even to their creators. That makes it difficult to explain why a model generated a specific answer.

Image credits: Freepik

The data researcher who spoke to BP Daily stressed that safety testing is already routine at major AI labs. “We run tons of evaluations and benchmarks before a model is released.”

Specialized benchmarks, such as those for measuring bias, involve testing the model on a set of questions and ranking its outputs.

“So this might be the set of truths that you can think are more universally agreeable. You get the LLM to give outputs, and you can rank them on that,” the researcher said.

Designing such benchmarks for bias and neutrality is technically feasible. One option is a leaderboard approach, in which developers feed models a series of factual questions with known answers and rank the models by accuracy.
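To make the leaderboard idea concrete, here is a minimal sketch in Python. It assumes that each "model" is simply a function from a question string to an answer string and that answers are scored by exact match after light normalization. The question set, the `model_a`/`model_b` stand-ins, and all function names are hypothetical toy examples, not any AI lab’s actual benchmark.

```python
from typing import Callable, Dict, List, Tuple

# A "model" here is any function mapping a question to an answer string.
# Real LLM API calls would replace these hypothetical stand-ins.
Model = Callable[[str], str]


def normalize(text: str) -> str:
    """Lowercase and trim whitespace and a trailing period so trivial formatting isn't scored as an error."""
    return text.strip().rstrip(".").lower()


def accuracy(model: Model, questions: List[Tuple[str, str]]) -> float:
    """Fraction of questions whose answer exactly matches the agreed-upon reference after normalization."""
    correct = sum(
        normalize(model(question)) == normalize(reference)
        for question, reference in questions
    )
    return correct / len(questions)


def leaderboard(models: Dict[str, Model], questions: List[Tuple[str, str]]) -> List[Tuple[str, float]]:
    """Score every model on the same question set and sort from best to worst."""
    scores = [(name, accuracy(model, questions)) for name, model in models.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical question set: only items with broadly agreed-upon answers.
QUESTIONS = [
    ("What is the boiling point of water at sea level in Celsius?", "100"),
    ("How many U.S. states are there?", "50"),
    ("What is the chemical symbol for gold?", "Au"),
]


# Hypothetical toy "models" backed by lookup tables.
def model_a(question: str) -> str:
    answers = {QUESTIONS[0][0]: "100", QUESTIONS[1][0]: "50", QUESTIONS[2][0]: "Au."}
    return answers.get(question, "I don't know")


def model_b(question: str) -> str:
    answers = {QUESTIONS[0][0]: "212", QUESTIONS[1][0]: "50"}
    return answers.get(question, "I don't know")


if __name__ == "__main__":
    for name, score in leaderboard({"model_a": model_a, "model_b": model_b}, QUESTIONS):
        print(f"{name}: {score:.2f}")  # model_a: 1.00, then model_b: 0.33
```

Exact-match scoring only works when a single reference answer exists for each question, which is why contested political or cultural questions are hard to fold into this kind of leaderboard.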

It’s important to note that this depends on having agreed-upon truths in the first place. For politically or culturally contentious topics, that agreement may be impossible to reach.

The Trump order explicitly labels DEI concepts—including unconscious bias, systemic racism, and intersectionality—as ideological positions to be removed from government AI.

Trump’s order labels DEI concepts as ideological positions to be removed from government AI

Image Credits: Google Research

Sorelle Friedler of the Brookings Institution described the measure as “remarkably heavy-handed” for a plan that otherwise promotes deregulation.

Friedler notes that private AI companies already filter or block outputs based on their own corporate goals and legal obligations. In this case, however, the government dictates those decisions, and companies that want federal contracts must comply with them.

In mid-July, the U.S. Department of Defense awarded contracts worth up to $200 million each to AI companies Anthropic, Google, OpenAI, and xAI, with the goal of accelerating the department’s adoption of advanced AI capabilities.

CNBC reported that the wording of the anti-woke AI order aligns with the messaging of xAI, owned by former Trump advisor and megadonor Elon Musk.

Image credits: Tom Brenner/Getty Images

Musk’s AI chatbot Grok has been advertised as “anti-woke” and “maximally truth-seeking,” but it has been observed reflecting Musk’s own views when answering certain controversial questions.

Grok has also faced high-profile incidents, including producing antisemitic statements and praising Adolf Hitler.

Meanwhile, the Trump administration’s AI plan urges caution in regulating AI in the private sector and imposes strict rules only on federal procurement. In theory, this means the order does not constrain commercial AI companies.

Image credits: Kevin Carter/Getty Images

MAGA champion Rep. Marjorie Taylor Greene has spoken out against Trump’s plan to expand AI capacities in the U.S. “My deep concerns are that the EO demands rapid AI expansion with little to no guardrails and breaks,” she wrote on X.

“We have no idea what AI will be capable of in the next 10 years and giving it free rein and tying states hands is potentially dangerous,” she said in June.

The Trump administration’s recent defunding policies also undermine the very institutions that could help deliver trustworthy AI. For instance, the National Science Foundation, tasked in the plan with leading AI safety research, has been defunded and politicized, with grants canceled based on political criteria and staff fired.

The bottom line is that removing all bias from AI is not just a technical challenge; it’s a political one. As long as the definition of neutrality depends on human judgment, it will be shaped by whoever holds the power to decide.
