Of all the failed promises Elon Musk has made, taking down child sexual abuse material from X is perhaps the most futile.

The repeated legal cases X is fighting over child sexual abuse material (CSAM) claims are only the tip of the iceberg.

Within a month of acquiring Twitter, now X, Musk fired many of its content moderators, along with contractors hired to track harmful content. That alone should have raised eyebrows about how true he would stay to his promise.

- Elon Musk fired many Twitter content moderators after acquiring the platform, now X, weakening efforts against child sexual abuse material (CSAM).

- Research shows thousands of accounts share CSAM on X, exploiting bulk account creation and hashtag hijacking.

- Although X has increased removals and introduced new detection tech, CSAM persists due to systemic platform flaws.

Despite Elon Musk’s promise to take down child sexual abuse material from X, the platform houses big CSAM operations

Image credits: Beata Zawrzel/Getty Images

Musk also ousted the then-head of Twitter’s trust and safety department, Yoel Roth, and suggested that he was an advocate for child sexualization, a claim that was widely debunked.

Roth was part of the team that banned U.S. President Donald Trump from Twitter.

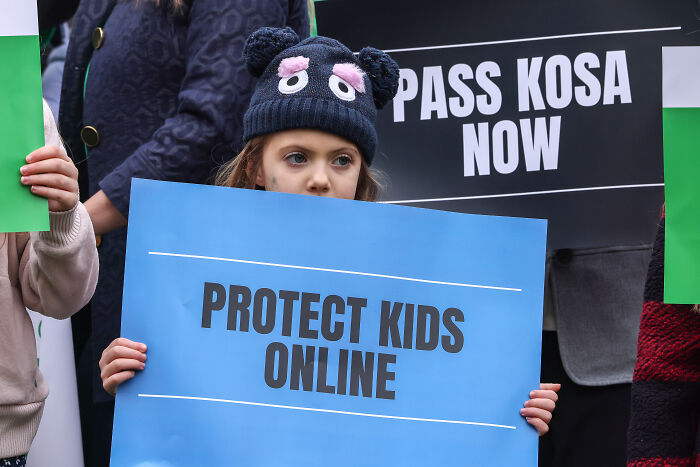

In 2023, X was issued a $610,500 fine under Australia’s Online Safety Act for failing to crack down on child sexual abuse material. As you read this, X is under investigation by the European Commission for an alleged child abuse network on the platform.

In the U.S., a federal appeals court has revived a case against X, alleging it was negligent in its handling of CSAM and failed to promptly report a video consisting of explicit images of underage boys.

Image credits: STR/Getty Images

The case also highlights how X “slow-walked its response” to reports, a pattern that was also flagged under Australia’s Online Safety Act and by researchers.

But the case was originally filed in 2021, before Musk took over Twitter. X has since repeatedly promised “zero tolerance for child sexual abuse material” and that it is a “top priority” to tackle those who exploit children.

Just this month, BBC Investigates shared the story of a victim of child sexual abuse who begged Musk to stop the links on X that offer images of her being abused. Her images are being sold online, in plain sight, on a seemingly innocent social media platform: X.

Image credits: Jemal Countess/Getty Images

The way this works is that the images are available on the dark web, and links to them are publicized on X. An investigation by nonprofit group Alliance4Europe identified more than 150 accounts sharing CSAM videos over only three days.

Saman Nazari, Lead Researcher at Alliance4Europe, says the real number could range from thousands to millions. “They are continuously activating more and more accounts. We’re probably talking about millions of posts going into these hashtags,” Nazari told BP Daily.

The CSAM industry is a huge economy in itself. A chilling report by the Global Child Safety Institute, Childlight, found the industry of sexual exploitation and abuse of children to be a multibillion-dollar global trade.

💲Clicks for cash💲

🧑💻Child abuse is a multi-billion-dollar industry

🛡️To safeguard children we must

✅Fine companies whose platforms enable abuse ✅Educate young people about online dangers ✅Regulate across the tech industry – not just social media

🔦Research 🔗in comments pic.twitter.com/PUYsuTsAaJ

— Childlight – Global Child Safety Institute (@Childlight_) April 10, 2025

More importantly, whether knowingly or by omission, tech companies and online payment platforms are profiting from this industry. In reality, we will never know where X stands on this.

The content moderation team at X has taken down several accounts after they have been reported, but the problem lies in the mechanisms that make it easy for criminals to create a new account within 1.5 minutes — less if it’s automated.

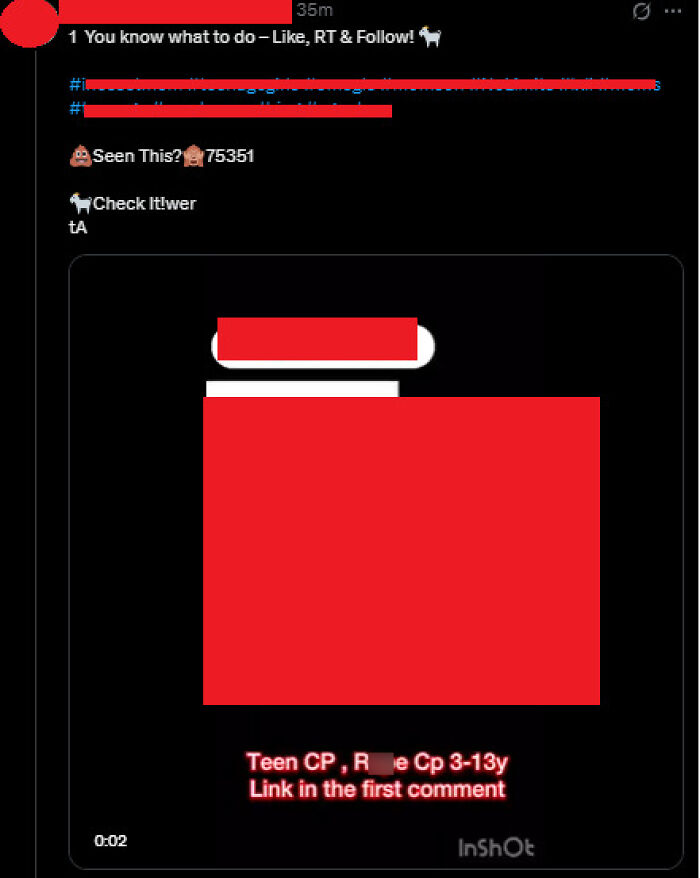

Nazari showed me just how easy it was to find these accounts based on their behavior. These accounts were created in bulk using a series of throwaway accounts that are disposed of quickly.

Saman Nazari showed BP Daily how easy it was to create bulk accounts that post links to CSAM dark web pages on X

Image credits: Alliance4Europe

Then, the perpetrators use another set of accounts to share those links on posts by regular users and take advantage of popular hashtags, in a process called hashtag hijacking.

Nazari then showed me a program that can automatically identify recent posts based on the parameters input. “They [X] could easily block [CSAM accounts] if they focus on addressing the behavior, figuring out how these techniques work and what the characteristics of operation are,” Nazari said.

“Another even easier way is just to block the IP addresses that are being used to bulk create accounts. Adding additional verification steps, like phone numbers, could be another step that they could take to hinder these types of mass creation,” he added.

Nazari and his team also noted another inconsistency. The accounts would often change names, with many of them having Vietnamese names one day and British names the next. This suggests that many of these CSAM accounts are being bought, an issue that X also needs to address.

Image credits: Alliance4Europe

However, X did not respond to the report or the data that Alliance4Europe shared with it. A quick search shows that several posts are still on the platform.

In June, Thorn, a California-based NGO that shares its technology with social media platforms to detect and address CSAM, told NBC News that X had stopped paying recent invoices for its work. X told Thorn it was moving to its own in-house technology to address the issue.

The most recent figures, as of January 2024, show staffing drops of 30% in trust and safety, 80% among safety engineers, 52% among full-time moderators, and 12% among contract moderators. X Corp then announced plans to hire an additional 100 full-time content moderators.

Image credits: Kevin Dietsch/Getty Images

According to Alliance4Europe’s study, X has rapidly removed CSAM, leading to a decrease in the intensity of these activities. X’s age verification system has also blocked users from accessing content, which has hampered the hashtag hijacking process.

In June this year, X announced the launch of a new CSAM hash-matching system to quickly and safely check media.

“When we identify apparent CSAM, we act swiftly to suspend the account and report the content to @NCMEC [National Center for Missing & Exploited Children],” X said in a post. “[NCMEC] works with law enforcement globally to pursue justice and protect children.”

“In 2024, X sent 686,176 reports to the National Center for Missing and Exploited Children (NCMEC) and suspended 4,572,486 accounts. Between July and October 2024, @NCMEC received 94 arrests and 1 conviction thanks to this partnership,” the company further said.

X has taken several steps to reduce CSAM, but has not fully stopped it

At X, we have zero tolerance for child sexual exploitation in any form. Until recently, we leveraged partnerships that helped us along the way. We are proud to provide an important update on our continuous work detecting Child Sexual Abuse Material (CSAM) content, announcing…

— Safety (@Safety) June 18, 2025

While X has taken several steps to identify and reduce CSAM, it has not fully stopped it.

Another trend, as highlighted by NBC News, is the use of the Communities feature on X to post into groups of tens of thousands of people devoted to topics like incest.

Much of this material is shared using hashtags, and even hashtags that are widely used for this purpose have not been taken off the platform.

“If these hashtags have been there for a while, if they are being completely taken over, there must be a point in blocking them for a moment and then trying to stop the network,” Nazari recommended.

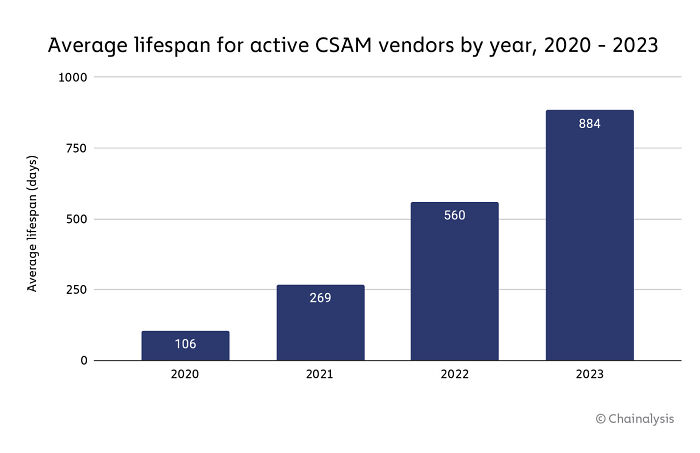

Image credits: Chainalysis

Blocklists are lists of known CSAM URLs that can be blocked across major internet service providers. Many NGOs have compiled such lists, with the Internet Watch Foundation finding 142,789 such unique URLs in 2024.

Childlight noted there are five attempts per second globally to access CSAM that has already been placed on these blocklists.

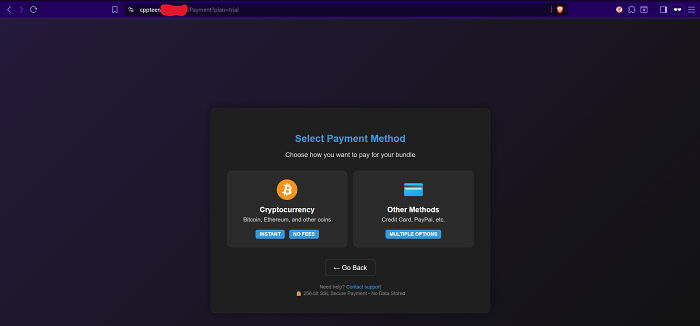

This goes to show that child sexual exploitation online is an organized economy with supply, distribution, payment, and demand. Bitcoin is a big facilitator in this ecosystem, Alliance4Europe noted, along with PayPal. Unless X disrupts the entire ecosystem, Elon Musk’s “zero tolerance” rhetoric remains hollow.

Image credits: Alliance4Europe

While Musk promised zero tolerance for CSAM, he also bought Twitter under his guiding philosophy, “Freedom of Speech, Not Freedom of Reach,” which favors free speech over moderation.

Other major platforms like Meta, YouTube, and TikTok have provided detailed CSAM transparency reports, but X has released only minimal information, making it arguably the least transparent major social network regarding CSAM enforcement.

Ultimately, the persistence of CSAM on X highlights that platform policies alone are insufficient. As long as X does not address the underlying infrastructural gaps that allow for the content to be shared, it remains a weak link in the global fight against child sexual exploitation — no matter how many promises Musk makes.
